Inception Core Server is the foundational runtime behind the broader Inception project — a modular AI infrastructure designed around the belief that memory precedes intelligence.

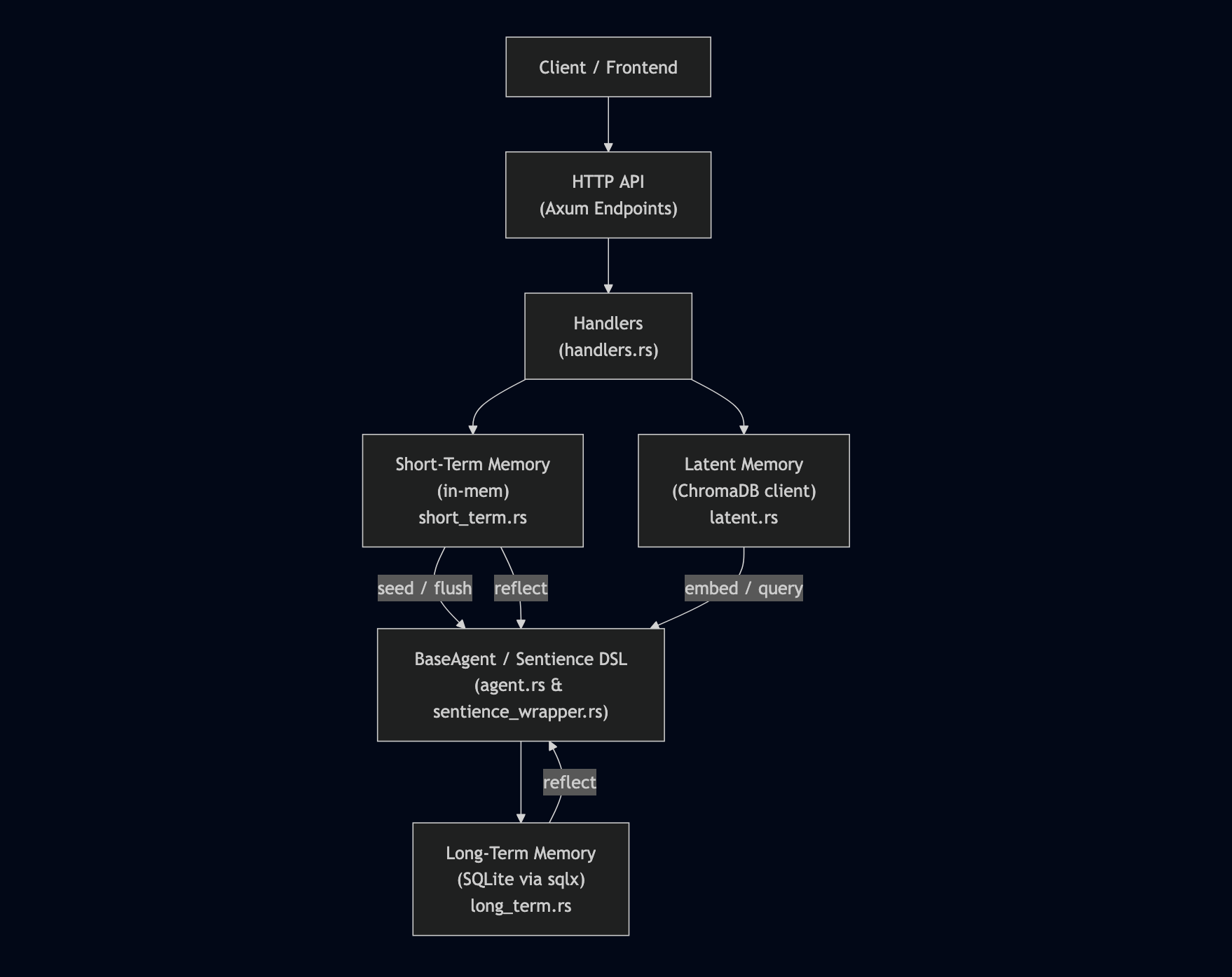

At its heart lies the MCP architecture: Model–Context–Protocol, orchestrating LLMs, contextual memory (short, long, latent), and a structured communication layer for cognitive agents.
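The snippet below shows one way the Model, Context and Protocol roles could be expressed as Rust traits and types. It is a minimal sketch. The names `Model`, `Context`, `Envelope`, `EchoModel`, `WindowContext` and `handle` are hypothetical and are not taken from `src/icore/`. The `EchoModel` stands in for a real LLM call.

```rust
use std::collections::VecDeque;

/// Hypothetical "Model" role: anything that turns a prompt into a completion.
pub trait Model {
    fn complete(&self, prompt: &str) -> String;
}

/// Hypothetical "Context" role: keeps memory items and renders them into a prompt.
pub trait Context {
    fn remember(&mut self, item: String);
    fn render(&self) -> String;
}

/// Hypothetical "Protocol" message passed between an agent and the core.
#[derive(Debug, Clone, PartialEq)]
pub struct Envelope {
    pub from: String,
    pub to: String,
    pub body: String,
}

/// Toy model that echoes the last line of its prompt (stand-in for an LLM).
pub struct EchoModel;

impl Model for EchoModel {
    fn complete(&self, prompt: &str) -> String {
        format!("echo: {}", prompt.lines().last().unwrap_or(""))
    }
}

/// Bounded context window that keeps only the `capacity` most recent items.
pub struct WindowContext {
    capacity: usize,
    items: VecDeque<String>,
}

impl WindowContext {
    pub fn new(capacity: usize) -> Self {
        Self { capacity, items: VecDeque::new() }
    }
}

impl Context for WindowContext {
    fn remember(&mut self, item: String) {
        if self.capacity == 0 {
            return; // a zero-sized window stores nothing
        }
        if self.items.len() == self.capacity {
            self.items.pop_front(); // evict the oldest item
        }
        self.items.push_back(item);
    }

    fn render(&self) -> String {
        self.items.iter().map(String::as_str).collect::<Vec<_>>().join("\n")
    }
}

/// One protocol round trip: store the message, build a prompt, send the reply back.
pub fn handle<M: Model, C: Context>(model: &M, ctx: &mut C, msg: Envelope) -> Envelope {
    ctx.remember(msg.body.clone());
    let reply = model.complete(&ctx.render());
    Envelope { from: msg.to, to: msg.from, body: reply }
}
```

Because the model and the context are separate traits, you can swap a local GGUF backend for a remote API without changing how memory gets assembled.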

Highlights

- Modular Memory System:
  - `short_term` (in-memory `HashMap`)
  - `long_term` (SQLite via `sqlx`)
  - `latent` (vector memory via ChromaDB)
- LLM Integration:
  - Pluggable local or remote inference via REST
  - Compatible with Mistral (GGUF / llama.cpp) or remote APIs
- Agent Runtime:
  - One or more agents operate via a shared protocol layer
  - Uses a custom REPL DSL: Sentience
- Structured Communication:
  - REST API for memory read/write
  - Latent memory embedding/query endpoints
  - Agent chat + Sentience execution
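The three memory tiers above can be modeled as one store with three backends. This sketch uses only `std` and makes the following assumptions:

- `short_term` is a `HashMap`, as in the project.
- `long_term` is approximated by an in-process `BTreeMap`. The real server uses SQLite via `sqlx`.
- `latent` runs brute-force cosine similarity over stored vectors. It takes the place of a ChromaDB collection and does not reflect Chroma's API.

The `MemoryStore` type and its method names are hypothetical.

```rust
use std::collections::{BTreeMap, HashMap};

/// Hypothetical three-tier store mirroring short_term / long_term / latent.
#[derive(Default)]
pub struct MemoryStore {
    short_term: HashMap<String, String>,
    // Stand-in for SQLite via sqlx: ordered and in-memory, NOT persistent.
    long_term: BTreeMap<String, String>,
    // Stand-in for a ChromaDB collection: (id, embedding) pairs.
    latent: Vec<(String, Vec<f32>)>,
}

/// Cosine similarity; returns 0.0 when either vector has zero length.
fn cosine(a: &[f32], b: &[f32]) -> f32 {
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let na = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let nb = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if na == 0.0 || nb == 0.0 { 0.0 } else { dot / (na * nb) }
}

impl MemoryStore {
    pub fn remember_short(&mut self, key: &str, value: &str) {
        self.short_term.insert(key.to_string(), value.to_string());
    }

    /// Move a short-term entry into long-term storage; false if the key was absent.
    pub fn consolidate(&mut self, key: &str) -> bool {
        match self.short_term.remove(key) {
            Some(v) => {
                self.long_term.insert(key.to_string(), v);
                true
            }
            None => false,
        }
    }

    /// Look in short-term memory first, then fall back to long-term.
    pub fn recall(&self, key: &str) -> Option<&str> {
        self.short_term
            .get(key)
            .or_else(|| self.long_term.get(key))
            .map(String::as_str)
    }

    pub fn embed(&mut self, id: &str, vector: Vec<f32>) {
        self.latent.push((id.to_string(), vector));
    }

    /// Return the ids of the `k` stored vectors most similar to `query`.
    pub fn query_latent(&self, query: &[f32], k: usize) -> Vec<&str> {
        let mut scored: Vec<(f32, &str)> = self
            .latent
            .iter()
            .map(|(id, v)| (cosine(query, v), id.as_str()))
            .collect();
        // Descending similarity; total_cmp gives a total order even if a score is NaN.
        scored.sort_by(|a, b| b.0.total_cmp(&a.0));
        scored.into_iter().take(k).map(|(_, id)| id).collect()
    }
}
```

A production latent tier would delegate embedding and nearest-neighbour search to ChromaDB rather than scanning every vector.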

Why It Matters

This isn't just a wrapper around an LLM.

It's a cortex simulator — where agents coordinate over memory, not prompts.

The long-term goal is autonomy — emergent behavior from structured storage, not fine-tuned weights.

Every line of code is part of a larger experiment:

Can intelligence grow from the ground up if memory is structured like biology?

Graphical overview: agents (organs) operate over short/long/latent memory via the MCP protocol.
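One way to picture agents that "coordinate over memory, not prompts" is a shared blackboard. Each agent reads from and writes to a common store instead of messaging another agent directly.

This sketch makes several assumptions:

- Everything runs in a single process.
- Threads act as agents.
- `Arc<Mutex<_>>` provides the sharing.

The `Blackboard` type, the `Op` protocol enum and the perceiver/planner roles are hypothetical. They are not the project's actual protocol layer.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical protocol operations an agent may issue against shared memory.
#[derive(Debug, Clone)]
pub enum Op {
    Write { key: String, value: String },
    Read { key: String },
}

/// Shared memory that every agent accesses only through `apply`.
#[derive(Clone, Default)]
pub struct Blackboard {
    inner: Arc<Mutex<HashMap<String, String>>>,
}

impl Blackboard {
    /// Execute one operation. Reads return the current value;
    /// writes return the value they replaced, if any.
    pub fn apply(&self, op: Op) -> Option<String> {
        let mut mem = self.inner.lock().expect("blackboard lock poisoned");
        match op {
            Op::Write { key, value } => mem.insert(key, value),
            Op::Read { key } => mem.get(&key).cloned(),
        }
    }
}

/// A "perceiver" agent writes an observation; a "planner" agent reads it
/// and writes a plan. Threads are joined in order so the result is deterministic.
pub fn run_two_agents(observation: &str) -> Option<String> {
    let board = Blackboard::default();

    let perceiver_board = board.clone();
    let obs = observation.to_string();
    thread::spawn(move || {
        perceiver_board.apply(Op::Write { key: "observation".into(), value: obs });
    })
    .join()
    .expect("perceiver panicked");

    let planner_board = board.clone();
    thread::spawn(move || {
        if let Some(seen) = planner_board.apply(Op::Read { key: "observation".into() }) {
            planner_board.apply(Op::Write { key: "plan".into(), value: format!("respond to: {seen}") });
        }
    })
    .join()
    .expect("planner panicked");

    board.apply(Op::Read { key: "plan".into() })
}
```

Neither agent holds a reference to the other. All coordination passes through the shared store.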

Architecture

- `src/icore/` – MCP layer (`model.rs`, `context.rs`, `protocol.rs`)
- `src/memory/` – all memory backends
- `src/agents/` – agent scaffolding + DSL behavior loader
- `src/api/` – REST interface (Axum)
- Docker setup for full LLM + Chroma + SQLite runtime
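The `src/api/` layer exposes memory and agent features over REST. This README does not list the actual route paths, so the sketch assumes a hypothetical scheme:

- `/memory/{tier}/{key}`
- `/latent/query`
- `/agent/chat`

It shows how a request's method and path could be dispatched to a typed endpoint using plain `std`. In an Axum server this matching would normally be handled by its `Router`.

```rust
/// Hypothetical endpoints; the real route names may differ.
#[derive(Debug, PartialEq)]
pub enum Endpoint<'a> {
    MemoryRead { tier: &'a str, key: &'a str },
    MemoryWrite { tier: &'a str, key: &'a str },
    LatentQuery,
    AgentChat,
    NotFound,
}

/// Assumed tier names for the memory routes.
const TIERS: [&str; 3] = ["short", "long", "latent"];

/// Map an HTTP method and path onto an endpoint.
pub fn route<'a>(method: &str, path: &'a str) -> Endpoint<'a> {
    // "/memory/short/goal" -> ["memory", "short", "goal"]; empty segments are dropped.
    let segments: Vec<&'a str> = path.split('/').filter(|s| !s.is_empty()).collect();
    match (method, segments.as_slice()) {
        ("GET", ["memory", tier, key]) if TIERS.contains(tier) => {
            Endpoint::MemoryRead { tier: *tier, key: *key }
        }
        ("PUT", ["memory", tier, key]) if TIERS.contains(tier) => {
            Endpoint::MemoryWrite { tier: *tier, key: *key }
        }
        ("POST", ["latent", "query"]) => Endpoint::LatentQuery,
        ("POST", ["agent", "chat"]) => Endpoint::AgentChat,
        _ => Endpoint::NotFound,
    }
}
```

Keeping route matching a pure function lets it be unit-tested without starting an HTTP server.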

Project Status

- Core server (Axum + memory)

- REST API routes

- Docker dev + prod config

- ChromaDB support

- Sentience DSL integration

- Multi-agent protocol expansion

- Reflection-driven decision layer

Inception is not just a server — it's the brainstem of a synthetic mind.

Everything else grows from this.