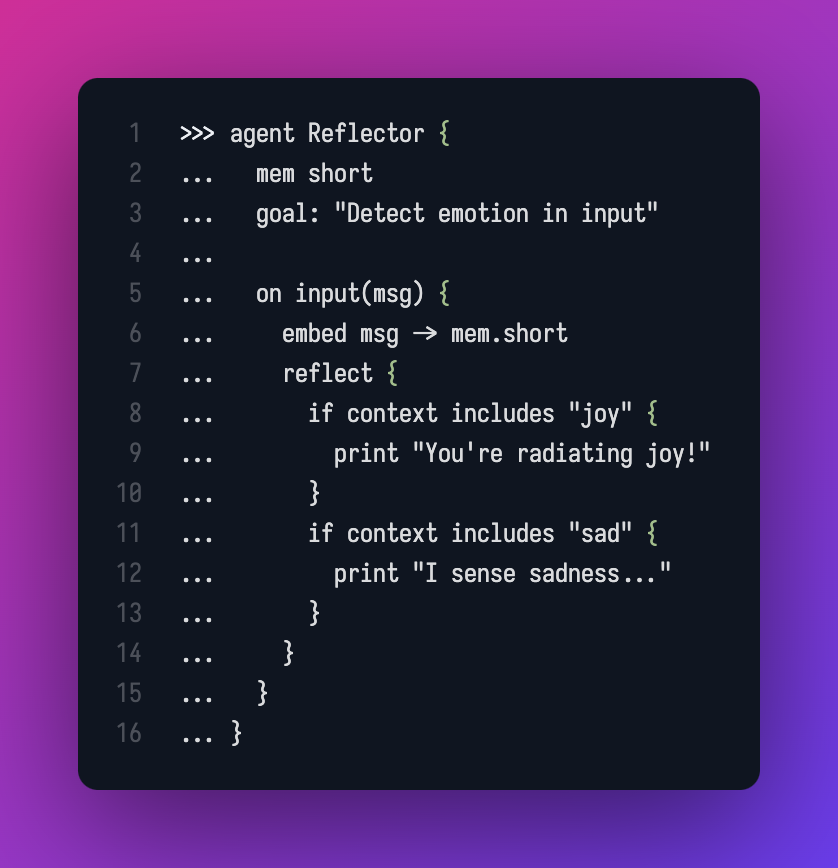

Agent with memory + reflection logic. Reads context, reacts to emotional cues.

Why I Built It

Most AI systems today are black boxes. Pretrained, stateless, non-reflective.

That’s a dead end for autonomy.

I needed a way to give agents memory, context, and the ability to reflect on previous actions — not just respond.

So I wrote my own language.

It’s called Sentience. It’s a REPL where everything an agent does is contextual, stored, and queryable. A full loop:

Input → Evaluation → Memory → Reflection → Adjustment
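To make that loop concrete, here is a minimal Rust sketch of one agent stepping through it. It is not Sentience's runtime. The `Agent` type, the word-counting `evaluate` score, and the single `threshold` parameter are hypothetical stand-ins, chosen only to show each stage feeding the next.

```rust
// Hypothetical sketch of Input → Evaluation → Memory → Reflection → Adjustment.
// `Agent`, `evaluate`, `reflect`, and `threshold` are illustrative names, not Sentience's API.
#[derive(Debug)]
struct Agent {
    memory: Vec<(String, i32)>, // (input, score) for every past step
    threshold: i32,             // the parameter the Adjustment stage modifies
}

impl Agent {
    fn new() -> Self {
        Agent { memory: Vec::new(), threshold: 0 }
    }

    // Evaluation: a toy score, +1 per "good" and -1 per "bad".
    fn evaluate(&self, input: &str) -> i32 {
        input
            .split_whitespace()
            .map(|w| match w {
                "good" => 1,
                "bad" => -1,
                _ => 0,
            })
            .sum()
    }

    // Reflection: compare the newest score with the (integer) average of all earlier scores.
    fn reflect(&self) -> Option<i32> {
        let (last, earlier) = self.memory.split_last()?;
        if earlier.is_empty() {
            return None; // nothing to compare against yet
        }
        let avg = earlier.iter().map(|(_, s)| s).sum::<i32>() / earlier.len() as i32;
        Some(last.1 - avg)
    }

    // One full pass through the loop for a single input.
    fn step(&mut self, input: &str) {
        let score = self.evaluate(input);             // Evaluation
        self.memory.push((input.to_string(), score)); // Memory
        if let Some(delta) = self.reflect() {         // Reflection
            self.threshold += delta.signum();         // Adjustment: nudge by at most 1
        }
    }
}
```

Feeding it `"good day"`, `"bad bad news"` and `"good good good"` in turn leaves `threshold` at 0. The second input scores below the history and nudges it down, and the third scores above it and nudges it back up. Because every step is plain data, a run can be replayed and inspected.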

What It Does

- Custom REPL (written in Rust)

- Memory access: `mem.short`, `mem.long`, `mem.latent`

- Reflective blocks: `reflect { ... }`

- Conditional logic based on context (`if context includes "X"`)

- Embed, train, recall: all scoped to the agent’s active memory state

- Supports structured agent declarations
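Here is a rough model of the three memory scopes. It assumes that `mem.short` is a bounded window of recent context, `mem.long` is a key/value store, and `mem.latent` is a map of embeddings. These types, the method names, and the substring semantics of `context_includes` are assumptions made for illustration, not the language's actual definitions.

```rust
use std::collections::{HashMap, VecDeque};

// Hypothetical model of the three scopes; names echo mem.short / mem.long / mem.latent.
struct Memory {
    short: VecDeque<String>,           // recent context, bounded window
    long: HashMap<String, String>,     // durable key/value facts
    latent: HashMap<String, Vec<f32>>, // embeddings keyed by the text they encode
    short_capacity: usize,
}

impl Memory {
    fn new(short_capacity: usize) -> Self {
        Memory {
            short: VecDeque::new(),
            long: HashMap::new(),
            latent: HashMap::new(),
            short_capacity,
        }
    }

    // Append to short-term memory, dropping the oldest entries past capacity.
    fn remember(&mut self, item: &str) {
        self.short.push_back(item.to_string());
        while self.short.len() > self.short_capacity {
            self.short.pop_front();
        }
    }

    // Write a fact to long-term memory, overwriting any previous value for `key`.
    fn store(&mut self, key: &str, value: &str) {
        self.long.insert(key.to_string(), value.to_string());
    }

    // Attach an embedding to a piece of text in latent memory.
    fn embed(&mut self, text: &str, vector: Vec<f32>) {
        self.latent.insert(text.to_string(), vector);
    }

    // Rough analogue of `if context includes "X"`: substring match over short-term memory only.
    fn context_includes(&self, needle: &str) -> bool {
        self.short.iter().any(|entry| entry.contains(needle))
    }
}
```

With a capacity of 2, a third `remember` call evicts the first entry, so `context_includes` no longer sees it. The post does not say whether Sentience scopes `includes` to short-term memory this way.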

What's Unique

- Not Turing-complete on purpose

- Designed for introspection, not general-purpose coding

- Agents can read what they’ve done, compare, and adjust

- REPL is deterministic, readable, hackable

Current Status

- Done: REPL parser, lexer, AST

- Done: Memory evaluator

- Done: Reflection blocks (`reflect`, `embed`)

- WIP: Training mechanism (currently stubbed)

- WIP: Agent context loop (Inception)

Everything runs in an isolated Rust runtime (no external LLMs).
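For a sense of what the lexing stage involves, a toy tokenizer for snippets like `reflect { if context includes "sad" }` might look like this. The `Token` set and the rule that identifiers may contain dots (so `mem.short` stays one token) are assumptions. The real Sentience lexer is in the repository linked below and may differ.

```rust
// Hypothetical token set; keywords are lexed as plain identifiers for simplicity.
#[derive(Debug, PartialEq, Clone)]
enum Token {
    Ident(String), // reflect, if, context, includes, mem.short, ...
    Str(String),   // contents of a double-quoted literal
    LBrace,
    RBrace,
}

// Turn source text into tokens, or report the first lexing error.
fn lex(src: &str) -> Result<Vec<Token>, String> {
    let mut tokens = Vec::new();
    let mut chars = src.chars().peekable();
    while let Some(&c) = chars.peek() {
        match c {
            c if c.is_whitespace() => {
                chars.next();
            }
            '{' => {
                chars.next();
                tokens.push(Token::LBrace);
            }
            '}' => {
                chars.next();
                tokens.push(Token::RBrace);
            }
            '"' => {
                chars.next(); // skip the opening quote
                let mut s = String::new();
                loop {
                    match chars.next() {
                        Some('"') => break,
                        Some(ch) => s.push(ch),
                        None => return Err("unterminated string".to_string()),
                    }
                }
                tokens.push(Token::Str(s));
            }
            c if c.is_alphanumeric() || c == '_' => {
                // Identifiers may contain dots so member paths lex as one token.
                let mut ident = String::new();
                while let Some(&ch) = chars.peek() {
                    if ch.is_alphanumeric() || ch == '_' || ch == '.' {
                        ident.push(ch);
                        chars.next();
                    } else {
                        break;
                    }
                }
                tokens.push(Token::Ident(ident));
            }
            other => return Err(format!("unexpected character {other:?}")),
        }
    }
    Ok(tokens)
}
```

A parser would then consume this flat token list to build the AST, for example by turning `Ident("reflect")` followed by a braced group into a reflect-block node.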

Links

- Code: github.com/nbursa/sentience

- Architecture WIP: github.com/nbursa/SynthaMind

What's Next

- Complete the training block (`train {}`)

- Support agent-defined behavior loops

- Integrate latent similarity (`similar_to "X"`) for semantic recall

- Finish the reflection runtime (Inception project)
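One common way to back a `similar_to "X"` query is cosine similarity between the query's embedding and stored embeddings. The post does not say how latent similarity will be implemented. This sketch assumes embeddings already exist as `Vec<f32>` and that a minimum score filters out weak matches. The function names and threshold are hypothetical.

```rust
use std::cmp::Ordering;

// Cosine similarity; returns 0.0 if either vector has zero length.
// Note: `zip` silently truncates if the vectors differ in dimension.
fn cosine(a: &[f32], b: &[f32]) -> f32 {
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let norm_a = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let norm_b = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm_a == 0.0 || norm_b == 0.0 {
        0.0
    } else {
        dot / (norm_a * norm_b)
    }
}

// Hypothetical `similar_to` backend: score every stored entry against the query,
// keep those at or above `min_score`, and return them best match first.
fn similar_to<'a>(
    query: &[f32],
    memory: &'a [(String, Vec<f32>)],
    min_score: f32,
) -> Vec<(&'a str, f32)> {
    let mut hits: Vec<(&str, f32)> = memory
        .iter()
        .map(|(text, emb)| (text.as_str(), cosine(query, emb)))
        .filter(|&(_, score)| score >= min_score)
        .collect();
    hits.sort_by(|a, b| b.1.partial_cmp(&a.1).unwrap_or(Ordering::Equal));
    hits
}
```

A linear scan like this is fine for a small per-agent memory. Larger stores would usually need an approximate nearest-neighbour index instead.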

Final Thought

I didn’t build Sentience to compete with anything.

I built it because I needed a tool to think with.

If it helps someone else build something weird — even better.

— Nenad